In today’s data-driven world, organizations depend heavily on efficient data pipelines to transform raw information into valuable insights. ETL, which stands for Extract, Transform, and Load, is a foundational process used to collect data from various sources, reshape it into meaningful formats, and store it in data warehouses or analytics systems. However, as data volumes continue to grow rapidly, poorly optimized ETL pipelines can become slow, expensive, and unreliable. This is where ETL process optimization becomes essential.

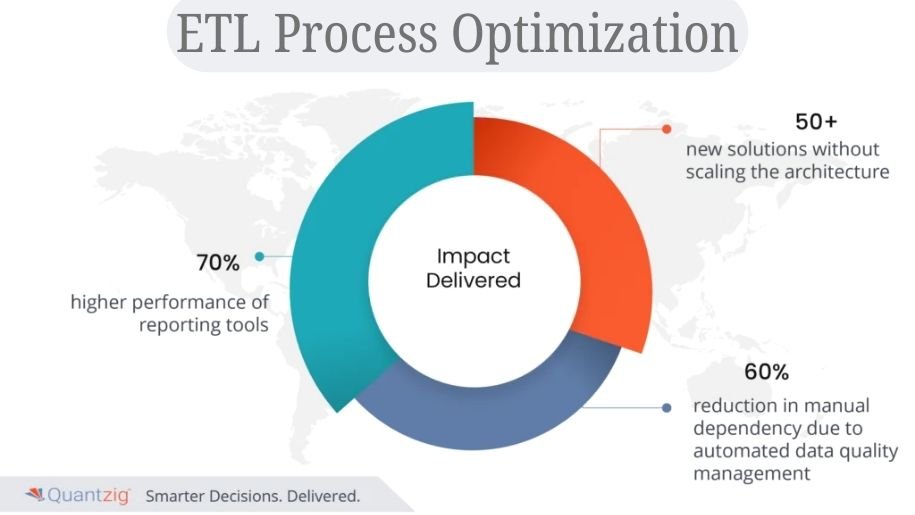

ETL process optimization refers to the strategies and techniques used to improve the performance, scalability, reliability, and efficiency of ETL workflows. Instead of simply moving data from one place to another, optimized pipelines focus on reducing processing time, minimizing resource consumption, and ensuring consistent data quality. Effective optimization not only accelerates analytics but also lowers operational costs and improves overall system performance.

Understanding the Core Components of ETL

Before exploring optimization techniques, it is important to understand the three core stages of ETL. The extraction stage involves collecting data from multiple sources such as databases, APIs, or files. Transformation involves cleaning, filtering, aggregating, and restructuring the extracted data to meet business requirements. Finally, the loading stage moves the processed data into a target system such as a data warehouse or analytics platform.

Each of these stages can introduce performance bottlenecks. Extraction may struggle with slow source queries, transformation may become resource-intensive due to complex calculations, and loading may cause delays because of inefficient database operations. Optimization requires analyzing each stage individually while also considering the pipeline as a whole.

Why ETL Optimization Matters

Modern organizations generate massive volumes of structured and unstructured data. Without optimization, ETL pipelines can suffer from long execution times, high infrastructure costs, and poor scalability. Delays in data processing can lead to outdated dashboards and decisions based on stale information.

Optimized ETL pipelines improve data freshness by reducing latency, allowing businesses to respond to changes more quickly. They also enhance system reliability by minimizing failures caused by resource overload or inefficient logic. Another critical advantage is cost efficiency, especially in cloud environments where compute resources are billed based on usage. By optimizing data workflows, organizations can process more data with fewer resources.

Challenges That Lead to Poor ETL Performance

Many ETL pipelines become inefficient over time because design choices that suited smaller datasets do not scale. One common issue is full data reloads, where the entire dataset is reprocessed on every run instead of only new or updated records. Another is inefficient transformation logic, particularly when transformations are applied row by row rather than in batches.

Data quality problems can also slow down pipelines, as inconsistent or incomplete data requires additional processing. In some cases, pipelines are built without proper monitoring, making it difficult to identify performance bottlenecks. As systems scale, these inefficiencies compound, resulting in longer runtimes and higher costs.

Extraction Optimization Techniques

Optimizing the extraction phase begins with reducing the amount of unnecessary data being pulled from source systems. Selecting only required columns and applying filters at the source level can significantly reduce data transfer time. Efficient query design plays a major role in extraction performance, especially when working with relational databases.
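As a minimal sketch of column projection and source-side filtering, the example below uses SQLite as a stand-in for a relational source. The `orders` table and its columns are hypothetical. The point is that the column list and the `WHERE` clause run inside the source database, so wide columns such as `raw_payload` and old rows are never transferred.

```python
import sqlite3

def extract_recent_orders(conn: sqlite3.Connection, since: str) -> list:
    """Pull only the columns and rows the pipeline needs.

    The column list and WHERE clause are evaluated inside the source
    database, so unneeded columns and rows never leave it.
    """
    query = (
        "SELECT order_id, customer_id, amount "
        "FROM orders WHERE order_date >= ? ORDER BY order_id"
    )
    return conn.execute(query, (since,)).fetchall()

# Demo source with a hypothetical `orders` schema, including wide columns we skip.
src = sqlite3.connect(":memory:")
src.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, "
    "amount REAL, order_date TEXT, notes TEXT, raw_payload BLOB)"
)
src.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?, ?, ?)",
    [
        (1, 10, 25.0, "2024-01-01", "old order", b"..."),
        (2, 11, 40.0, "2024-02-15", "new order", b"..."),
        (3, 10, 15.5, "2024-03-02", "new order", b"..."),
    ],
)
rows = extract_recent_orders(src, "2024-02-01")
print(rows)  # [(2, 11, 40.0), (3, 10, 15.5)]
```

The query is parameterized (`?`) rather than built with string formatting. This keeps the filter value out of the SQL text and lets the engine reuse the statement.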

Incremental extraction is one of the most impactful optimization methods. Instead of extracting the entire dataset on every run, the pipeline tracks changes using last-modified timestamps or monotonically increasing identifiers and pulls only the records that changed since the previous run. This reduces processing overhead and typically shortens run times considerably. Parallel extraction can also improve performance by splitting the workload, for example by key range or partition, across multiple threads or nodes so that large sources are read concurrently.
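One common way to implement timestamp-based incremental extraction is a "high-water mark." Each run stores the newest `updated_at` value it saw and asks only for rows newer than that. The sketch below assumes a hypothetical `customers` table with an ISO-8601 `updated_at` column, and uses SQLite in place of the real source. Two limitations are worth noting. This approach does not detect deleted rows. Also, with a strict `>` comparison, it can miss rows that are committed late with a timestamp equal to the stored mark. Change data capture is the usual remedy for both.

```python
import sqlite3

def extract_incremental(conn, last_watermark):
    """Return rows changed since `last_watermark`, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance to the newest timestamp seen; keep the old mark if nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

src = sqlite3.connect(":memory:")
src.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
src.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ada", "2024-05-01T10:00:00"), (2, "Lin", "2024-05-01T11:00:00")],
)

# First run: the watermark starts at the epoch, so every row is extracted.
first, wm = extract_incremental(src, "1970-01-01T00:00:00")

# A source update happens between runs.
src.execute(
    "UPDATE customers SET name = 'Lin Q', updated_at = '2024-05-02T09:00:00' WHERE id = 2"
)

# Second run: only the changed row comes back.
second, wm = extract_incremental(src, wm)
print(len(first), second, wm)
```

In a real pipeline, the watermark would be persisted, for example in a control table. It should be written only after the load for that run succeeds, so that a failed run is retried from the same point.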

Transformation Optimization Strategies

The transformation stage is often the most resource-intensive part of ETL. Complex calculations, joins, and data cleansing operations can consume significant compute power. One effective optimization approach is pushing transformations down to the database engine or data warehouse. Modern engines are built for set-based operations at scale, and running a transformation where the data already lives avoids shipping every row to application-level scripts.
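To make the pushdown idea concrete, here is a small sketch that computes the same per-region totals in two ways. The first pulls every row into Python and aggregates there. The second lets the database do the `GROUP BY`, so only one row per region is returned. SQLite and the `sales` table are illustrative stand-ins for a warehouse and its fact table.

```python
import sqlite3
from collections import defaultdict

def totals_in_python(conn):
    # Every row crosses into the application before aggregation.
    totals = defaultdict(float)
    for region, amount in conn.execute("SELECT region, amount FROM sales"):
        totals[region] += amount
    return dict(totals)

def totals_pushed_down(conn):
    # The engine aggregates; only one result row per region is returned.
    return dict(
        conn.execute("SELECT region, SUM(amount) FROM sales GROUP BY region").fetchall()
    )

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("east", 10.0), ("west", 5.0), ("east", 2.5)],
)
app_side = totals_in_python(conn)
db_side = totals_pushed_down(conn)
print(app_side, db_side)
```

Both functions return the same result. The difference is how much data moves and which engine does the work, and that gap grows with table size.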

Batch processing is another key strategy. Processing data in large batches rather than one row at a time reduces per-row overhead such as function calls, network round trips, and transaction commits. Simplifying transformation logic by eliminating unnecessary calculations or redundant steps can further improve efficiency. In-memory processing frameworks can also accelerate transformations by reducing disk I/O.
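A minimal sketch of batch-oriented processing follows. Records are consumed from an iterator in fixed-size chunks. Each chunk is transformed as a unit and written with a single `executemany` call and one commit, instead of one statement and one commit per row. The `events` table and the lower-casing rule are hypothetical. The `batched` helper is written out because `itertools.batched` exists only in Python 3.12 and later.

```python
import sqlite3
from itertools import islice

def batched(iterable, size):
    """Yield lists of up to `size` items from any iterable."""
    it = iter(iterable)
    while chunk := list(islice(it, size)):
        yield chunk

def transform_batch(chunk):
    # Hypothetical cleanup rule: normalise event kinds to lower case.
    return [(event_id, kind.strip().lower()) for event_id, kind in chunk]

def run_batched(conn, records, batch_size=1000):
    batches = 0
    for chunk in batched(records, batch_size):
        conn.executemany(
            "INSERT INTO events (id, kind) VALUES (?, ?)", transform_batch(chunk)
        )
        conn.commit()  # one commit per batch rather than per row
        batches += 1
    return batches

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, kind TEXT)")
records = ((i, " Click ") for i in range(2500))  # lazily generated input
n_batches = run_batched(conn, records)
summary = conn.execute("SELECT COUNT(*), MIN(kind) FROM events").fetchone()
print(n_batches, summary)  # 3 (2500, 'click')
```

Batch size is a trade-off. Larger batches amortize overhead further, but they hold more data in memory and make a failed batch more expensive to retry.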

Load Optimization and Data Storage Efficiency

Loading data into the target system requires careful planning to avoid bottlenecks. Bulk loading methods are typically faster than inserting records individually because they reduce transaction overhead. Using staging tables allows data to be loaded quickly before being merged into production tables.
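The staging pattern can be sketched as follows. Incoming rows are bulk-inserted into a staging table in one transaction and then merged into the production table with a single set-based statement. SQLite has no `MERGE`, so this sketch uses `INSERT OR REPLACE` as a stand-in. Be aware that it deletes and re-inserts conflicting rows, which is not identical to a warehouse `MERGE` or upsert. The table names `stg_products` and `products` are hypothetical.

```python
import sqlite3

def bulk_load_and_merge(conn, rows):
    with conn:  # commits on success, rolls back if any statement fails
        conn.execute("DELETE FROM stg_products")  # start from an empty stage
        conn.executemany(
            "INSERT INTO stg_products (id, name, price) VALUES (?, ?, ?)", rows
        )
        # Set-based merge from staging into production (stand-in for MERGE).
        conn.execute(
            "INSERT OR REPLACE INTO products (id, name, price) "
            "SELECT id, name, price FROM stg_products"
        )

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")
conn.execute("CREATE TABLE stg_products (id INTEGER, name TEXT, price REAL)")
conn.execute("INSERT INTO products VALUES (1, 'Pen', 1.0)")
conn.commit()

bulk_load_and_merge(conn, [(1, "Pen", 1.25), (2, "Pad", 3.0)])
final = conn.execute("SELECT * FROM products ORDER BY id").fetchall()
print(final)  # [(1, 'Pen', 1.25), (2, 'Pad', 3.0)]
```

Because the production table is touched only by the final statement, validation queries can run against the staging table before the merge without affecting readers.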

Partitioning and indexing can significantly improve query efficiency, but they affect loads differently. Partitioning divides large datasets into smaller segments, making data easier to manage and allowing queries and loads to touch only the relevant segments. Indexes speed up reads but add maintenance work to every insert, so excessive indexing can slow load operations. For this reason, some pipelines temporarily disable indexes during large loads and rebuild them afterward.
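The drop-load-rebuild approach to indexes can be sketched in a few lines. The example uses SQLite, which supports dropping and recreating indexes but has no native table partitioning, so only the indexing half of the paragraph is shown. The `readings` table and the `idx_readings_sensor` index are hypothetical.

```python
import sqlite3

def load_with_index_rebuild(conn, rows):
    # Drop the secondary index so the inserts skip per-row index maintenance.
    conn.execute("DROP INDEX IF EXISTS idx_readings_sensor")
    with conn:  # commits the bulk insert and index rebuild on success
        conn.executemany(
            "INSERT INTO readings (sensor_id, ts, value) VALUES (?, ?, ?)", rows
        )
        # Rebuild the index once, after all rows are in place.
        conn.execute("CREATE INDEX idx_readings_sensor ON readings (sensor_id)")

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (sensor_id INTEGER, ts TEXT, value REAL)")
conn.execute("CREATE INDEX idx_readings_sensor ON readings (sensor_id)")

load_with_index_rebuild(
    conn,
    [(1, "2024-06-01T00:00", 20.5), (2, "2024-06-01T00:00", 19.0), (1, "2024-06-01T01:00", 21.0)],
)
row_count = conn.execute("SELECT COUNT(*) FROM readings").fetchone()[0]
index_exists = conn.execute(
    "SELECT 1 FROM sqlite_master WHERE type = 'index' AND name = 'idx_readings_sensor'"
).fetchone() is not None
print(row_count, index_exists)  # 3 True
```

Whether this approach pays off depends on the ratio of new rows to existing rows. Rebuilding an index over a large table to add a handful of rows can cost more than maintaining it incrementally.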

Architectural Approaches to ETL Optimization

Beyond individual techniques, the overall architecture of an ETL system plays a critical role in performance. Traditional ETL pipelines often perform heavy transformations before loading data into a warehouse. Modern architectures increasingly adopt ELT approaches, where data is loaded first and transformed within the warehouse using powerful processing engines.
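The ELT ordering can be illustrated with a small sketch. Raw records are landed untouched, with every field stored as text. The cleaning and typing step is then a SQL statement executed by the target engine. SQLite stands in for the warehouse here, and the `raw_orders` and `orders` tables and the cleanup rules are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Load: land source records as-is, every field stored as text.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [
        ("1", "20.50", " us "),
        ("2", "5.00", "DE"),
        ("3", "", "us"),  # missing amount: filtered out during transform
    ],
)

# Transform: clean and type the data inside the database engine.
conn.execute(
    """
    CREATE TABLE orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           CAST(amount AS REAL)      AS amount,
           UPPER(TRIM(country))      AS country
    FROM raw_orders
    WHERE amount <> ''
    """
)
orders = conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
print(orders)  # [(1, 20.5, 'US'), (2, 5.0, 'DE')]
```

Keeping the raw landing table also means the transformation can be rerun or corrected later without extracting from the source again.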

Cloud-native architectures provide additional optimization opportunities through auto-scaling and distributed processing. Serverless computing models allow pipelines to dynamically allocate resources based on workload demand, reducing costs during low-usage periods. Event-driven architectures also improve efficiency by triggering pipelines only when new data becomes available, rather than running on fixed schedules.
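As a rough sketch of event-driven triggering, the example below uses an in-process `queue.Queue` in place of a real event source such as storage notifications or a message broker. A worker runs the pipeline once per arrival event instead of on a timer. The `run_pipeline` function and the file paths are hypothetical placeholders.

```python
import queue
import threading

def run_pipeline(event):
    # Placeholder for extract/transform/load of the newly arrived data.
    return f"processed {event['path']}"

def event_worker(events, results):
    while True:
        event = events.get()
        if event is None:  # sentinel value: stop the worker
            events.task_done()
            break
        results.append(run_pipeline(event))
        events.task_done()

events = queue.Queue()
results = []
worker = threading.Thread(target=event_worker, args=(events, results))
worker.start()

# A (hypothetical) file-arrival notifier would publish events like these.
for path in ["landing/orders_001.csv", "landing/orders_002.csv"]:
    events.put({"path": path})
events.put(None)
worker.join()
print(results)
```

The worker is idle until an event arrives. In a serverless setting, this is what allows compute to scale down to zero between arrivals.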

The Role of Automation and Monitoring

Monitoring and automation are essential components of ETL optimization. Without visibility into pipeline performance, it becomes difficult to identify inefficiencies. Metrics such as execution time, resource usage, error rates, and throughput help engineers understand how pipelines behave under different workloads.

Automated alerting systems can notify teams when pipelines fail or exceed performance thresholds. Over time, historical performance data can be used to fine-tune scheduling and resource allocation. Some advanced systems use machine learning to predict bottlenecks and automatically adjust pipeline configurations.
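Here is a minimal sketch of stage-level monitoring. A context manager records execution time, row count, throughput, and any error for each stage, and appends an alert when a stage fails or exceeds a time threshold. The `alerts` list stands in for a real notification channel. The names `monitored_stage` and `max_seconds` are illustrative, not taken from any particular tool.

```python
import time
from contextlib import contextmanager

@contextmanager
def monitored_stage(name, metrics, max_seconds, alerts):
    """Record duration, rows, throughput and errors for one pipeline stage."""
    stats = {"stage": name, "rows": 0, "error": None}
    start = time.perf_counter()
    try:
        yield stats  # the stage body sets stats["rows"]
    except Exception as exc:
        stats["error"] = repr(exc)
        raise
    finally:
        stats["seconds"] = time.perf_counter() - start
        stats["rows_per_sec"] = (
            stats["rows"] / stats["seconds"] if stats["seconds"] > 0 else 0.0
        )
        metrics.append(stats)
        if stats["error"] is not None:
            alerts.append(f"{name} failed: {stats['error']}")
        elif stats["seconds"] > max_seconds:
            alerts.append(f"{name} took {stats['seconds']:.2f}s (limit {max_seconds}s)")

metrics, alerts = [], []

with monitored_stage("transform", metrics, max_seconds=5.0, alerts=alerts) as s:
    data = [x * 2 for x in range(10_000)]
    s["rows"] = len(data)

try:
    with monitored_stage("load", metrics, max_seconds=5.0, alerts=alerts):
        raise ConnectionError("warehouse unavailable")
except ConnectionError:
    pass  # the failure has already been recorded and alerted

print(metrics[0]["rows"], alerts)
```

Storing each `stats` dictionary over time produces the kind of history the paragraph describes, which can then be used to tune schedules and resource allocation.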

Data Quality and Governance in Optimized ETL

Optimization is not only about speed; it also involves maintaining high data quality and governance standards. Poor-quality data can lead to repeated transformations, manual corrections, and inconsistent results. Implementing data validation rules early in the pipeline helps reduce downstream processing.
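Early validation can be as simple as splitting each incoming batch into valid records and rejects, with reasons attached, before any expensive transformation runs. The two rules below (a non-empty `customer_id` and a non-negative numeric `amount`) are hypothetical examples chosen for this sketch.

```python
from dataclasses import dataclass, field

@dataclass
class ValidationResult:
    valid: list = field(default_factory=list)
    rejected: list = field(default_factory=list)

def validate(records):
    """Split records into valid rows and rejects before heavy transformation."""
    result = ValidationResult()
    for rec in records:
        errors = []
        if not rec.get("customer_id"):
            errors.append("missing customer_id")
        amount = rec.get("amount")
        if not isinstance(amount, (int, float)) or amount < 0:
            errors.append("amount must be a non-negative number")
        if errors:
            result.rejected.append({"record": rec, "errors": errors})
        else:
            result.valid.append(rec)
    return result

batch = [
    {"customer_id": "C1", "amount": 30.0},
    {"customer_id": "", "amount": 12.0},
    {"customer_id": "C3", "amount": -5},
]
res = validate(batch)
print(len(res.valid), [r["errors"] for r in res.rejected])
```

Routing rejects to a separate table or file with their error reasons lets the main pipeline continue while bad records are fixed at the source, rather than being corrected repeatedly downstream.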

Metadata management also plays a role in optimization by providing context about data sources, schemas, and transformation logic. Well-documented pipelines are easier to maintain and optimize because engineers can quickly identify inefficient processes or outdated logic.

Common Tools and Technologies Supporting Optimization

Many modern data engineering tools are designed with optimization in mind. Distributed processing frameworks enable parallel computation, while workflow orchestration tools manage scheduling and dependencies between tasks. Data warehouses offer advanced query optimization features that accelerate transformation and loading processes.

Choosing the right tools depends on factors such as data volume, latency requirements, and organizational infrastructure. Regardless of the technology stack, the principles of optimization remain consistent: minimize unnecessary work, leverage scalable systems, and continuously monitor performance.

Future Trends in ETL Optimization

As data ecosystems evolve, ETL optimization continues to incorporate new technologies and methodologies. Real-time data processing is becoming increasingly popular, allowing organizations to analyze events as they happen rather than relying on batch processing. Artificial intelligence is also influencing pipeline optimization by enabling automated tuning and predictive resource management.

Another emerging trend is data mesh architecture, which distributes data ownership across teams while maintaining standardized pipelines. This approach encourages scalable and modular ETL design, reducing bottlenecks caused by centralized systems.

Conclusion

ETL process optimization is a critical discipline for modern data engineering. As organizations generate larger volumes of data, efficient pipelines become essential for delivering timely insights and maintaining cost-effective operations. By optimizing extraction, transformation, and loading stages, engineers can significantly improve performance and scalability.

Successful optimization requires a combination of technical strategies and architectural planning. Incremental loading, efficient transformations, bulk loading, and cloud-native designs all contribute to faster and more reliable pipelines. Continuous monitoring and automation ensure that pipelines remain efficient as data volumes grow. Ultimately, optimized ETL processes empower organizations to unlock the full value of their data while maintaining flexibility and resilience in an ever-changing technological landscape.

Frequently Asked Questions (FAQs)

What is ETL process optimization in simple terms?

ETL process optimization involves improving the speed, efficiency, and reliability of data pipelines by refining how data is extracted, transformed, and loaded into storage systems.

Why do ETL pipelines become slow over time?

Pipelines often slow down due to increasing data volume, inefficient queries, unnecessary transformations, or outdated architecture that was not designed for large-scale processing.

What is one of the most effective ways to improve ETL performance?

Incremental processing is one of the most impactful improvements because it reduces the need to process entire datasets repeatedly and focuses only on new or changed data.

How does cloud computing help with ETL optimization?

Cloud platforms provide scalable resources, automated infrastructure management, and distributed processing capabilities, which help pipelines run faster and more efficiently.

Is ETL optimization only important for large companies?

No, even small organizations benefit from optimized pipelines because efficient data processing reduces costs, improves analytics accuracy, and ensures reliable system performance.